I made a Telegram Sports Curator Bot in Rust

It was a Sunday. I was bored at home. I thought: there must be some sporting event going on. I searched Google for “what sporting events to watch” and found many interesting ones: darts, sports racing, table tennis…

I’m a big fan of watching sporting events. It doesn’t matter the sport. If there’s a nice rivalry, or something big on the line, I’m in. I love competition, high stakes. I could watch a poker hand or a bowling event.

But I realised I’m always watching the same sports: NBA, European football, tennis and UFC.

Then I had the idea: how cool would it be to have some sort of “sporting events curator”, someone who tells me the best events to watch every week?

Sunday afternoon project starts

I started thinking about what I wanted exactly. Ideally, I would get a notification on Monday about the best events to watch that week.

I did not want this notification to get lost in my email. No. This was WAY more important than job offers, AWS bills, or spam.

I needed a virtual friend.

A virtual entity that would message me, that would disturb me with a notification.

Giving life to the Telegram Sports Curator Bot

The easy answer to my needs: a Telegram bot.

I would love to use WhatsApp, but their API is way more convoluted than Telegram’s. It’s one of those cases where the number of hoops to jump through makes it a less viable option, similar to using the OpenAI API vs the Google Gemini API. This is a Sunday project, we want the fastest and easiest stuff.

In the past couple of years, I’ve been bullish on Rust, the programming language. It’s hard, it’s verbose. It makes you think a lot. The compiler is harsh. In the era of AI, I love going back to difficult, complex domains like Rust. I’ve found it makes me a better developer. Compared to JS, in Rust I have to think about pointers, borrowing, lifetimes, and a slew of other fun stuff.

Since this is a backend-only project, let’s use Rust and put my brain to good use on this slow Sunday afternoon.

Getting our hands dirty

My initial plan:

- A Rust backend will make a POST request using the OpenAI API, specifically the web_search tool.

- In the request, there’s a prompt that says something like: “Find me the best televised sporting events to watch this week. Do not focus on a specific sport or country. Focus on rivalries, high-stakes games, important events no matter the country or context. In every event, tell me the time in CET timezone, and also include a short line about why to watch it”

- Once we get the response, we send the message with the Telegram bot.

- This runs periodically, every Monday, in an AWS Lambda triggered with EventBridge.
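To make the first two steps a bit more concrete, here is a minimal sketch of what the request body could look like. It assumes the shape of OpenAI's Responses API (a POST to `https://api.openai.com/v1/responses` with a `tools` entry of type `web_search`); the function names and the model string are placeholders of mine, not the project's actual code. In the real project serde_json would build this JSON; the sketch sticks to the standard library only so that it compiles on its own.

```rust
/// Escape a string so it can be placed inside a JSON string literal.
fn json_escape(s: &str) -> String {
    let mut out = String::with_capacity(s.len() + 2);
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Any other control character must be written as \uXXXX.
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// Build the JSON body for the POST request.
/// Assumption: the request shape follows the Responses API with the
/// `web_search` tool enabled. `model` is whatever model name you use.
fn build_request_body(model: &str, prompt: &str) -> String {
    format!(
        r#"{{"model":"{}","tools":[{{"type":"web_search"}}],"input":"{}"}}"#,
        json_escape(model),
        json_escape(prompt)
    )
}
```

The resulting string would be sent as the request body with a `Content-Type: application/json` header and the API key as a bearer token.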

This was pretty straightforward. My initial doubts were:

- Is there a framework to make a Telegram bot in Rust?

- How hard is it to deploy Rust code to an AWS Lambda?

- Will the prompt + web search work?

After some research, I was in luck.

There’s an awesome Telegram bot framework for Rust, teloxide.

I even found a framework to build & deploy Rust lambdas to AWS, Cargo Lambda.

Regarding the prompt, it was just a matter of trial and error, but I got it working. I did have to read the OpenAI API Web Search docs, but they were super clear. The response is a deeply nested object, but hey, I’ve been deep into JS Objects since 2015. This was easy even for a dark, cold, Sunday afternoon.

Tech stack

Pretty standard Rust BE stuff:

```toml
[dependencies]
aws_lambda_events = { version = "1.0.0", default-features = false, features = ["eventbridge"] }
lambda_runtime = "1.0.1"
tokio = { version = "1", features = ["full"] }
reqwest = { version = "0.13.1", features = ["json"] }
serde = { version = "1.0.228", features = ["derive"] }
serde_json = "1.0.149"
teloxide = { version = "0.17.0", features = ["macros"] }
chrono = "0.4.42"
dotenv = "0.15.0"
```

tokio is an amazing async runtime, ideal for web requests. reqwest makes it super easy to create an HTTP client and have fun with GET and POST. serde is a serialization/deserialization framework. serde_json builds on top of serde to make it fun to play with JSON. I wish all programming languages had those two.

The AWS Lambda runtime parts were kind of challenging. You need to wrap your code in a handler function so that it can interact with other AWS services and get access to logging. If you just upload valid Rust code to a Lambda, the code itself may be fine, but the invocation will fail because the binary doesn’t follow the Lambda runtime conventions. I had to look at many of these examples: https://github.com/aws/aws-lambda-rust-runtime/tree/main/examples

Let’s goooo 🚀

The coding itself was kind of fast. I did have to make sure I was returning the right types from my functions, and figure out what kind of Result AWS Lambda expects.

But yeah, I wired up the OpenAI key, easily created a bot using BotFather, and started playing with the prompts and results.

First of all, these web_search prompts are not cheap. In my case, one single prompt costs about 30 cents. Yeah, it’s nothing to run it once a week, but imagine how many times I ran this prompt while I was testing. Let’s just say it was an unprofitable evening, in terms of money. But hey - it’s all for fun, a beer nowadays is 3-4 euros. This was worth it.

Obviously, the results are non-deterministic. When I run a prompt, I get different results, even if I run them the same day and a minute apart. This is the inherent problem with AI. But in this case, it’s not important. I can fine-tune it in the future. For example, I can nudge it towards a sport, like darts or chess.

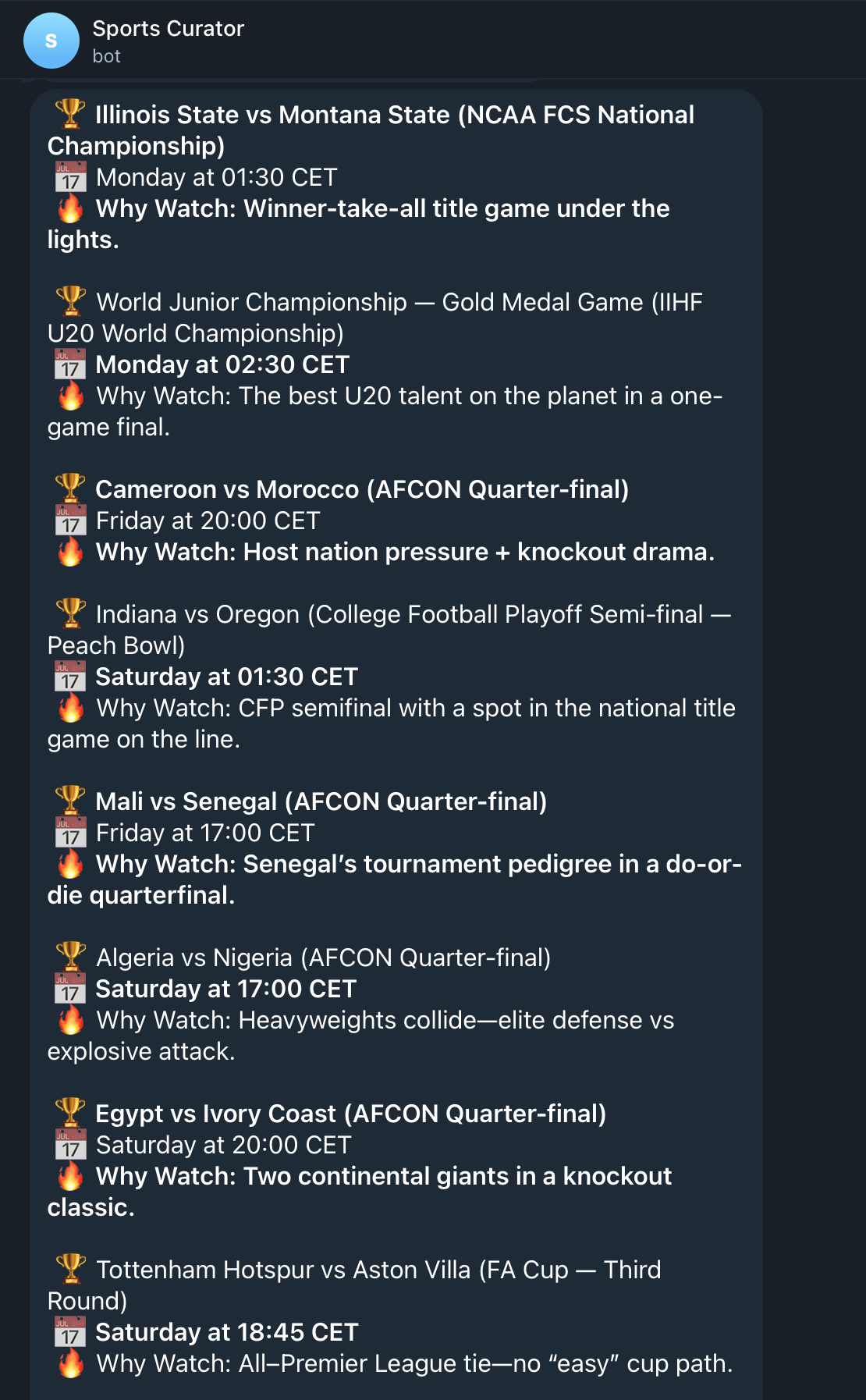

But the initial results were quite good - I got what I wanted, a curated weekly timetable of amazing sporting events to watch, all of them in my timezone.

I was happy. I went from idea to final result in a couple of hours.

Closing thoughts

I absolutely love coding in Rust, compared to JS. It’s a much stricter language, but one that makes you be more correct and actually think about what you are doing and why. It’s difficult to explain. I assume the C family of languages is similar, but in the end, JS is a scripting language that went too far.

I’m also surprised by how many awesome crates Rust has. teloxide made the Telegram part easy, and I thought it was gonna be the hardest.

Formatting the OpenAI response as Markdown was challenging. I got a lot of emojis and special characters that broke the Markdown parsing on the Telegram side. So to keep it simple, I changed the prompt to ask for plain text, end of story. We want to make our life simpler.
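For reference, the alternative to plain text would be escaping the text before sending it. This is not what the bot does; it is only a sketch of that other route, assuming Telegram's MarkdownV2 parse mode, where a fixed set of punctuation characters has to be prefixed with a backslash. The function name is hypothetical. teloxide also has an escape helper in `teloxide::utils::markdown`, which is worth checking before hand-rolling anything.

```rust
/// Characters that Telegram's MarkdownV2 mode treats as special,
/// plus the backslash itself so existing backslashes stay literal.
const MARKDOWN_V2_SPECIAL: &str = "_*[]()~`>#+-=|{}.!\\";

/// Prefix every special character with a backslash.
/// Note: this escapes everything, so the message renders as plain
/// text even though it is sent in MarkdownV2 mode.
fn escape_markdown_v2(text: &str) -> String {
    let mut out = String::with_capacity(text.len());
    for c in text.chars() {
        if MARKDOWN_V2_SPECIAL.contains(c) {
            out.push('\\');
        }
        out.push(c);
    }
    out
}
```

Emojis pass through untouched here; it is the punctuation (dots, dashes, exclamation marks in times and scores) that needs the backslashes.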

One interesting thing I had to add was chunking. Telegram has a limit on how long a message can be (4096 characters, to be exact). At first, some messages failed because they were too long, and the whole thing was rejected. So I read a bit and found the solution: chunk (split) a long message into several shorter ones. In the end, for a response of n characters we send roughly n/4000 messages, rounded up. The 4000 leaves a bit of margin under the limit. This is fine because the messages arrive at the same time, and they are easy to read.

```rust
let mut current_chunk = String::new();
let mut current_len = 0;

for line in content.lines() {
    // Because we are adding '\n'
    let line_len = line.chars().count() + 1;

    if !current_chunk.is_empty() && current_len + line_len > 4000 {
        bot.send_message(recipient, &current_chunk).await?;
        current_chunk.clear();
        current_len = 0;
    }

    current_chunk.push_str(line);
    current_chunk.push('\n');
    current_len += line_len;
}

if !current_chunk.is_empty() {
    bot.send_message(recipient, &current_chunk).await?;
}
```

One thing to watch out for here: String::len() gives us the length in bytes, not chars (reference). This is important because, for example, some emojis are 1 character but 4 bytes. Since the Telegram API is counting characters, we also need to count characters to make sure we don’t go over the limit.
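The same loop can also be pulled out into a pure function that only does the splitting, which makes it easy to test without a bot or a network. This is a sketch of that idea, not the code the bot runs; the name `chunk_by_lines` and the `limit` parameter are made up for illustration.

```rust
/// Group the lines of `content` into chunks, starting a new chunk
/// whenever adding the next line would exceed `limit` characters.
/// Lengths are counted in chars, not bytes.
/// Caveat: a single line longer than `limit` is not split; it ends
/// up as one oversized chunk.
fn chunk_by_lines(content: &str, limit: usize) -> Vec<String> {
    let mut chunks = Vec::new();
    let mut current = String::new();
    let mut current_len = 0;

    for line in content.lines() {
        // +1 for the '\n' we append after each line
        let line_len = line.chars().count() + 1;

        if !current.is_empty() && current_len + line_len > limit {
            // Move the finished chunk out and leave an empty String behind.
            chunks.push(std::mem::take(&mut current));
            current_len = 0;
        }

        current.push_str(line);
        current.push('\n');
        current_len += line_len;
    }

    if !current.is_empty() {
        chunks.push(current);
    }
    chunks
}
```

With this, the sending part shrinks to a loop over `chunk_by_lines(&content, 4000)` that calls `bot.send_message` once per chunk. The bytes vs chars difference is easy to check too: a single basketball emoji has a `len()` of 4 but a `chars().count()` of 1.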

End result